U.S.A. Presidential Vocabulary

- Alex Ricciardi

- Dec 12, 2020

- 9 min read

Updated: Dec 14, 2020

My Codecademy portfolio project from the Data Scientist Path Natural Languages Processing (NLP) Course, Word Embeddings Section.

▪ Overview

Whenever a United States of America president is elected or re-elected, an inauguration ceremony takes place to mark the beginning of the president’s term. During the ceremony, the president gives an inaugural address to the nation, dictating the tone and focus of the next four years of leadership.

In this project you will have the chance to analyze the inaugural addresses of the presidents of the United States of America, as collected by the Natural Language Toolkit, using word embeddings.

By training sets of word embeddings on subsets of inaugural address versus the collection of presidents as a whole, we can learn about the different ways in which the presidents use language to convey their agenda.

▪ Project Goal:

Analyze USA presidential inaugural speeches using NLP word embeddings models.

▪ Project Requirements

Be familiar with:

Python3

NLP (Natural Languages Processing

The Python Libraries:

re

Pandas

Json

Collections

NLKT

Sklearn

Link:

The Data

The project corpus data can be freely downloaded from NLTK Corpora under the designation "68. C-Span Inaugural Address Corpus".

▪ The Corpus:

The corpus is the collection of president inaugural speeches from 1789-Washington to 2016-Trump, the data making the corpus is provided as text files.

Text file name sample:

I merged the text files into one python list of objects called speeches.

Sample speeches: 1793-Washington's speech

'Fellow citizens, I am again called upon by the voice of my country to execute the functions of its Chief Magistrate. When the occasion proper for it shall arrive, I shall endeavor to express the high sense I entertain of this distinguished honor, and of the confidence which has been reposed in me by the people of united America.\n\nPrevious to the execution of any official act of the President the Constitution requires an oath of office. This oath I am now about to take, and in your presence: That if it shall be found during my administration of the Government I have in any instance violated willingly or knowingly the injunctions thereof, I may (besides incurring constitutional punishment) be subject to the upbraidings of all who are now witnesses of the present solemn ceremony.\n\n \n'We can see from the speeches corpus sample that the above text is not clean. It can not be processed properly by a NLT model, speeches is the project un-preprocessed corpus .

Preprocessed Corpus

Before a text can be processed by a NLP model, the text data needs to be preprocessed.

Text data preprocessing is the process of cleaning and prepping the text data to be processed by NLP models.

Cleaning and prepping tasks:

Noise removal is a text preprocessing step concerned with removing unnecessary formatting from our text.

Tokenization is a text preprocessing step devoted to breaking up text into smaller units (usually words or discrete terms).

Normalization is the name we give most other text preprocessing tasks, including stemming, lemmatization, upper and lowercasing, and stopwords removal.

- Stemming is the normalization preprocessing task focused on removing word

affixes.

- Lemmatization is the normalization preprocessing task that more carefully brings

words down to their root forms.

▪ Tokenization

In this project, I broke down the presidential speeches into words on a sentence by sentence basis by using the sentence tokenizer nltk.tokenize.PunktSentenceTokenizer().

▪ Removing stopwords

I used the stopwords list from nltk.corpus.stopwords to remove stopwords from the corpus.

▪ Lemmatization

I used nltk.stem.WordNetLemmatizer.lemmatize() function to lemmatized the corpus.

▪ Part-of-Speech Tagging

To improve the performance of lemmatization, each word in the processed text is assigned parts of speech tag, such as noun, verb, adjective, etc. The process is called Part-of-Speech Tagging.

To tag the corpus words, I used the lexical database of English from nltk.corpus.reader.wordnet data and the nlt.corpus.reader.wordnet.synsets() function.

▪ The 'us' word

The word 'us' is a commonly used word in presidential inauguration addresses.

The result of preprocessing the word 'us' through lemmatizing with the part-of-speech tagging method nlt.corpus.reader.wordnet.synsets() function and in conjunction with stopwords removal and the nltk.stem.WordNetLemmatizer().lemmatize() method, is that the word 'us' becomes 'u'.

This happens because the lemmatize(word, get_part_of_speech(word)) method removes the character 's' at the end of words tagged as nouns. The word 'us', which is not part of the stopwords list, is tagged as a noun causing the lemmatization result of 'us' to be 'u'.

The following lines of code solves this issue.

# ---------------- Removes stopwords from sentences

sentence_no_stopwords = [word for word in word_tokenized_sentence \

if word not in stop_words]

# ---------------- Before lemmatizing, adds a 's' to the word 'us'

word_sentence_us = ['uss' if word == 'us' else word \

for word in sentence_no_stopwords]

# ---------------- Lemmatizes

word_sentence = [normalizer.lemmatize(word, get_part_of_speech(word)) \

for word in word_sentence_us] The preprocessed presidential speeches were stored within a python list of objects called preprocessed_speeches, and saved within the corresponding text file, preprocessed_speeches.txt.

Sample preprocessed_speeches:

Words list from the second speech first sentences, from 1793-Washington's speech.

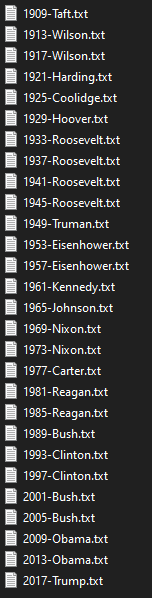

['fellow', 'citizens', 'called', 'upon', 'voice', 'country', 'execute', 'functions', 'chief', 'magistrate']I stored the preprocessed presidential speeches lists into a DataFrame called df_presidents_speeches, and saved it within the corresponding comma-separated values file, presidents_speeches.csv

Sample df_presidents_speeches:

Word Embeddings

Word embeddings are a type of word representation that allows words with similar meaning to have a similar representation. In NLP words are often represented as numeric vectors, the algorithms used to vectorize words are referred to as "words to vectors" (word2vec).

Word vector sample:

us

[-0.14337042 -0.42002755 0.04444088 0.16094017 -0.20708892 -0.1110167

-0.10896247 -0.00725871 -0.28715938 -0.05983362 -0.03677864 -0.2339048

-0.1463677 -0.02352026 -0.2253138 -0.03972395 -0.10435399 -0.0910004

-0.04984815 0.19013952 0.07048502 -0.09880995 0.19615257 0.1965861

-0.0792985 0.01203563 -0.11549533 0.17788032 -0.22004314 -0.1917008

0.14149937 0.15478796 0.5355729 -0.06770235 -0.19444901 -0.2677069

0.0494894 -0.10535853 0.10590784 -0.19706418 0.30547538 -0.0852098

0.03505427 0.05895421 -0.04728445 -0.15666784 -0.02900041 -0.2637179

-0.11152758 0.08258722 0.31440705 -0.00491959 -0.12276714 -0.0592019

0.08757532 0.04843157 0.02039615 -0.10374659 0.03611287 0.0840925

0.16862065 0.12372146 -0.1518512 -0.21229784 -0.23986538 0.2471761

-0.00701786 -0.13091983 0.2565383 -0.02272793 0.06017233 -0.0268575

-0.1872175 -0.31481022 -0.16351888 0.3875946 -0.14306375 -0.1065706

-0.06812624 0.34567258 0.10388853 -0.09119318 0.00772498 -0.0865779

-0.02311127 -0.0570781 -0.07193686 0.04780634 -0.31940058 0.0482250

-0.01591856 0.18802257 -0.22996111 -0.01813974 0.02495508 -0.295896]Using the word vectors we can calculate the cosine distances between the vectors to find out how similar terms are within the USA presidential inaugural speeches context.

▪ All Presidents

Analysis of the presidential vocabulary by looking at all the inaugural addresses.

Most frequently used terms:

The following list of words are the ten most frequently used presidential inauguration speech terms.

The numeric values represent the number of times the corresponding words appear in the combined presidential inauguration speeches.

[('government', 651),

('people', 623),

('nation', 515),

('us', 480),

('state', 448),

('great', 394),

('upon', 371),

('must', 366),

('make', 357),

('country', 355)]The following list of words are the three most frequently used presidential inauguration speech terms.

['government', 'people', 'nation']From the three most frequently used term results, we can see that the main topic within the combined inaugural addresses seem to be centered around the words government, people and nation.

Word2Vec, word embeddings model:

The idea behind word embeddings is a theory known as the distributional hypothesis. This hypothesis states that words that occur in the same contexts tend to have similar meanings. Word2Vec is a shallow neural network model that can build word embeddings using either continuous bag-of-words or continuous skip-grams.

The word2vec method that I used to create word embeddings is based on continuous skip-grams. Skip-grams function similarly to n-grams, except instead of looking at groupings of n-consecutive words in a text, we can look at sequences of words that are separated by some specified distance between them.

For this project, I created a word embeddings model using the skip-grams word2vec model, within the USA presidential inaugural speeches context.

I used models.Word2Vec() from the gensim python library, to create a word embeddings model.

Note:

Machine learning models will produce different results on same dataset, the models generate a sequence of random numbers called random seed used within the process of generating test, validation and training datasets from a given dataset. Configurating a model's seed to a set value will ensure that the results are reproducible.

The python library gensim relies on different processes to initialize and train its word embeddings model class, if you need to generate more consistent results (not recommended), click on the following link:

Code sample:

word_embeddings = gensim.models.Word2Vec(all_sentences, size=96,

window=5, min_count=1,

workers=2, sg=1)Similar terms:

Using the word vectors created by word embeddings model, we can calculate the cosine distances between the vectors to find out how similar terms are within the USA presidential inaugural speeches context.

Similar terms sample:

The ten most similar terms to the word government, (within the USA presidential inaugural speeches context).

The numeric values represent the vectors' cosine distance between the term government and the ten most similar terms to the word government.

government

[('power', 0.9975095987319946), ('federal', 0.9974785447120667), ('authority', 0.9967800974845886), ('within', 0.9962350130081177), ('support', 0.9957302808761597), ('law', 0.9954397082328796), ('territory', 0.9951248168945312), ('defend', 0.994941771030426), ('protect', 0.9949225783348083), ('reserve', 0.9948856830596924), ('respect', 0.9948171377182007), ('grant', 0.9948017597198486), ('general', 0.9947109818458557), ('exercise', 0.9946185350418091), ('union', 0.9943878054618835), ('principle', 0.9943051934242249), ('right', 0.9942262172698975), ('executive', 0.9942165017127991), ('limit', 0.9941080808639526), ('preserve', 0.993848443031311)]The list of the similar terms with no cosine distance:

government

['power', 'federal', 'authority', 'within', 'support', 'law', 'territory', 'defend', 'protect', 'reserve', 'respect', 'grant', 'general', 'exercise', 'union', 'principle', 'right', 'executive', 'limit', 'preserve']From the presidential inaugural addresses data and by training a word embeddings model with it, I was able to create a some what accurate U.S.A. presidential vocabulary, the small size of corpus limits how efficiently the model can be trained, nonetheless the model gives us good insight into how terms are connected to each other within the presidential inauguration addresses context.

▪ One President

Analysis of a president's vocabulary by looking at his inaugural addresses.

One president's most frequently used terms:

The following lists of words are the ten most frequently used terms by the presidents Madison and Bush respectively.

Once more, the numeric values represent the number of times the corresponding words appeared in each of the president's inauguration speeches.

madison

[('war', 17), ('nation', 13), ('country', 11), ('state', 10), ('public', 8), ('unite', 8), ('right', 7), ('every', 7), ('without', 6), ('long', 6)]

bush

[('america', 38), ('freedom', 38), ('nation', 37), ('us', 27), ('time', 24), ('american', 23), ('make', 22), ('work', 21), ('great', 21), ('world', 21)]The following lists of words are the three most frequently used terms by the presidents Madison and Bush respectively.

madison

['war', 'nation', 'country']

bush

['america', 'freedom', 'nation']Similar terms:

The ten most similar terms to the word government, (within a president's inaugural speeches context).

Once more, the numeric values represent the vectors' cosine distance between the term government and the ten most similar terms to the word government.

Within the inaugural speeches of president Madison:

madison's government

[('try', 0.30390772223472595), ('direct', 0.3015757203102112), ('susceptible', 0.2710644602775574), ('warfare', 0.26841405034065247), ('cultivate', 0.26799431443214417), ('triumph', 0.2613541781902313), ('speak', 0.2577613890171051), ('costly', 0.2402854859828949), ('trespass', 0.23802343010902405), ('resheathed', 0.23120231926441193), ('avow', 0.22971150279045105), ('station', 0.2235976606607437), ('bind', 0.2205573469400406), ('flash', 0.2196066975593567), ('fail', 0.21351571381092072), ('deep', 0.20669057965278625), ('insurrectional', 0.20200034976005554), ('reserve', 0.20102760195732117), ('discussion', 0.19728434085845947), ('bosom', 0.19643764197826385)]Within the inaugural speeches of president Bush:

bush's government

[('speak', 0.3728598356246948), ('courage', 0.3562648296356201), ('deep', 0.34997132420539856), ('citizen', 0.3480147421360016), ('dignity', 0.3403896689414978), ('promise', 0.33718132972717285), ('right', 0.334867924451828), ('nation', 0.33438464999198914), ('subject', 0.3293880820274353), ('us', 0.3235391080379486), ('time', 0.31916359066963196), ('world', 0.31477758288383484), ('come', 0.31368908286094666), ('strive', 0.31293532252311707), ('triumph', 0.3103782534599304), ('men', 0.31022849678993225), ('liberator', 0.3014175593852997), ('day', 0.2934373617172241), ('friend', 0.2926769554615021), ('senator', 0.29224398732185364)]The cosine distance values of the most similar terms for both presidents are under 0.4, the results are less than satisfying due to the small size of the corpus (inaugural speeches given only by one president) used to train the word embeddings models.

The lists of the similar terms with no cosine distance :

madison's government

['try', 'direct', 'susceptible', 'warfare', 'cultivate', 'triumph', 'speak', 'costly', 'trespass', 'resheathed', 'avow', 'station', 'bind', 'flash', 'fail', 'deep', 'insurrectional', 'reserve', 'discussion', 'bosom']

bush's government

['speak', 'courage', 'deep', 'citizen', 'dignity', 'promise', 'right', 'nation', 'subject', 'us', 'time', 'world', 'come', 'strive', 'triumph', 'men', 'liberator', 'day', 'friend', 'senator']Presidents' Vocabularies DataFrame:

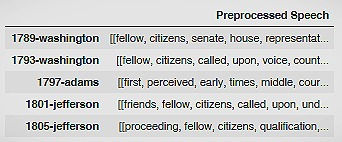

I created presidents' vocabularies DataFrame which takes the president names as the index and takes the values of the three and the ten most recurrent terms lists specific to each president, and the values of the vocabulary of terms lists specific to each president.

DataFrame sample:

▪ Selection of Presidents

We can analyze further, using word embeddings, the presidential vocabulary by combining the first five US presidents' inaugural speeches and compare the results with the results from last five US presidents' inaugural speeches.

Most frequently used terms:

The following lists of words are the ten most frequently used terms by the combined first and the combined last five presidents respectively.

First Five Presidents

[('government', 105), ('state', 103), ('nation', 81), ('great', 75), ('may', 69), ('citizen', 66), ('country', 65), ('people', 64), ('war', 61), ('public', 60)]

Last Five Presidents

[('us', 176), ('america', 111), ('must', 105), ('nation', 104), ('world', 101), ('new', 101), ('american', 95), ('time', 91), ('people', 90), ('freedom', 81)]The following lists of words are the three most frequently used terms by the combined first and combined last five presidents respectively.

First Five Presidents

['government', 'state', 'nation']

Last Five Presidents

['us', 'america', 'must']Similar terms:

The ten most similar terms to the word government, (within a selection of presidents' inaugural speeches context).

Once more, the numeric values represent the vectors' cosine distance between the term government and the ten most similar terms to the word government.

Similar terms sample:

First five presidents government

[('great', 0.9993625283241272), ('would', 0.9993107318878174), ('every', 0.999304473400116), ('war', 0.9993011355400085), ('state', 0.999292254447937), ('nation', 0.9992531538009644), ('us', 0.9992316365242004), ('right', 0.9992256760597229), ('country', 0.9991927742958069), ('union', 0.999192476272583), ('public', 0.9991827011108398), ('duty', 0.9991818070411682), ('make', 0.999152421951294), ('may', 0.9991515278816223), ('citizen', 0.9991361498832703), ('principle', 0.9991114139556885), ('party', 0.9990929961204529), ('year', 0.9990882277488708), ('peace', 0.9990814924240112), ('place', 0.9990769624710083)]

Last five presidents government

[('us', 0.9995543956756592), ('world', 0.9995338916778564), ('must', 0.9994977712631226), ('promise', 0.9994778037071228), ('child', 0.9994691014289856), ('citizen', 0.9994655847549438), ('every', 0.9994533061981201), ('nation', 0.9994449615478516), ('right', 0.9994438886642456), ('american', 0.9994357228279114), ('great', 0.9994322657585144), ('time', 0.999428391456604), ('make', 0.9994162917137146), ('new', 0.9994096159934998), ('people', 0.9994072914123535), ('work', 0.9994065761566162), ('day', 0.9993953108787537), ('come', 0.9993935823440552), ('know', 0.9993910789489746), ('generation', 0.9993775486946106)]The cosine distance values of the most similar words are close to 1, the results are satisfying, better than ones from one president, the results are better due to the larger size of the corpus used to train the word embeddings models.

The lists of the similar terms with no distance vector:

First five presidents government

['great', 'would', 'every', 'war', 'state', 'nation', 'us', 'right', 'country', 'union', 'public', 'duty', 'make', 'may', 'citizen', 'principle', 'party', 'year', 'peace', 'place']

Last five presidents government

['us', 'world', 'must', 'promise', 'child', 'citizen', 'every', 'nation', 'right', 'american', 'great', 'time', 'make', 'new', 'people', 'work', 'day', 'come', 'know', 'generation']

Comments